LinkedIn Verification: what is it and should B2B marketers care?


Reading: 0118 322 4395 | Manchester: 0161 706 2414 | Oxford: 01865 479 625 | info@sharpahead.com | Office hours: Monday-Friday 9:00am - 5:30pm



Last month I looked at how Bing Chat, Microsoft’s ChatGPT-powered search engine, performed for B2B research. I was pleasantly surprised at how useful it was. Read the full blog article here.

This month I’ve applied the same structured research and evaluation process to Google’s equivalent, Bard.

I can’t sugarcoat this. Bard is currently pretty terrible at B2B research – at least, for the types of question I tested.

Google is up front that Bard is still a prototype, and we can expect it to change and evolve at a rapid pace. But as it stands today, I don’t see much use for Bard in B2B research. Bing Chat is far superior.

Here are a few examples to show what I mean.

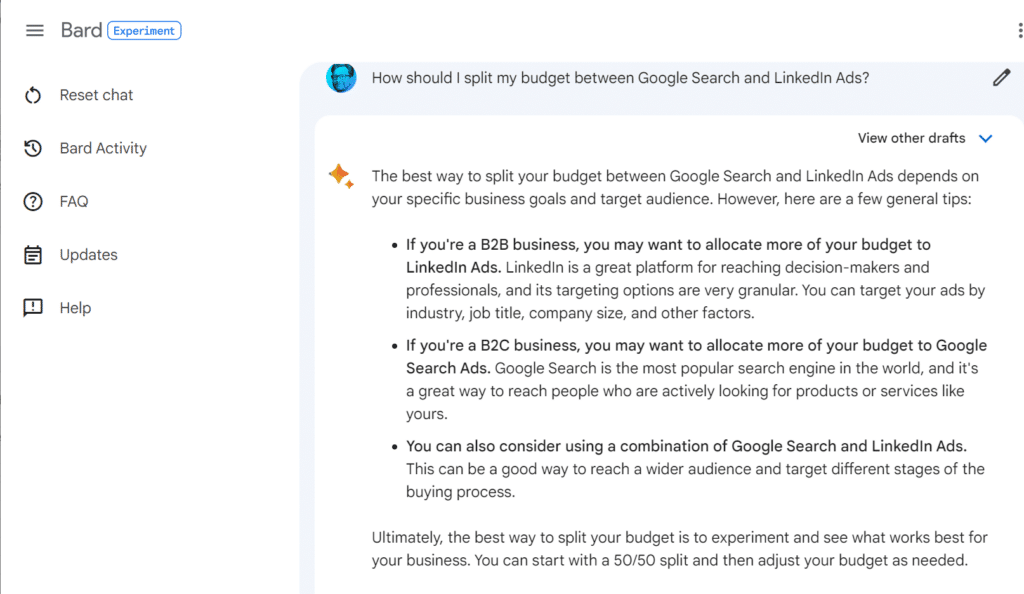

Bard did OK with the basics here. I noticed two big differences in comparison with Bing Chat:

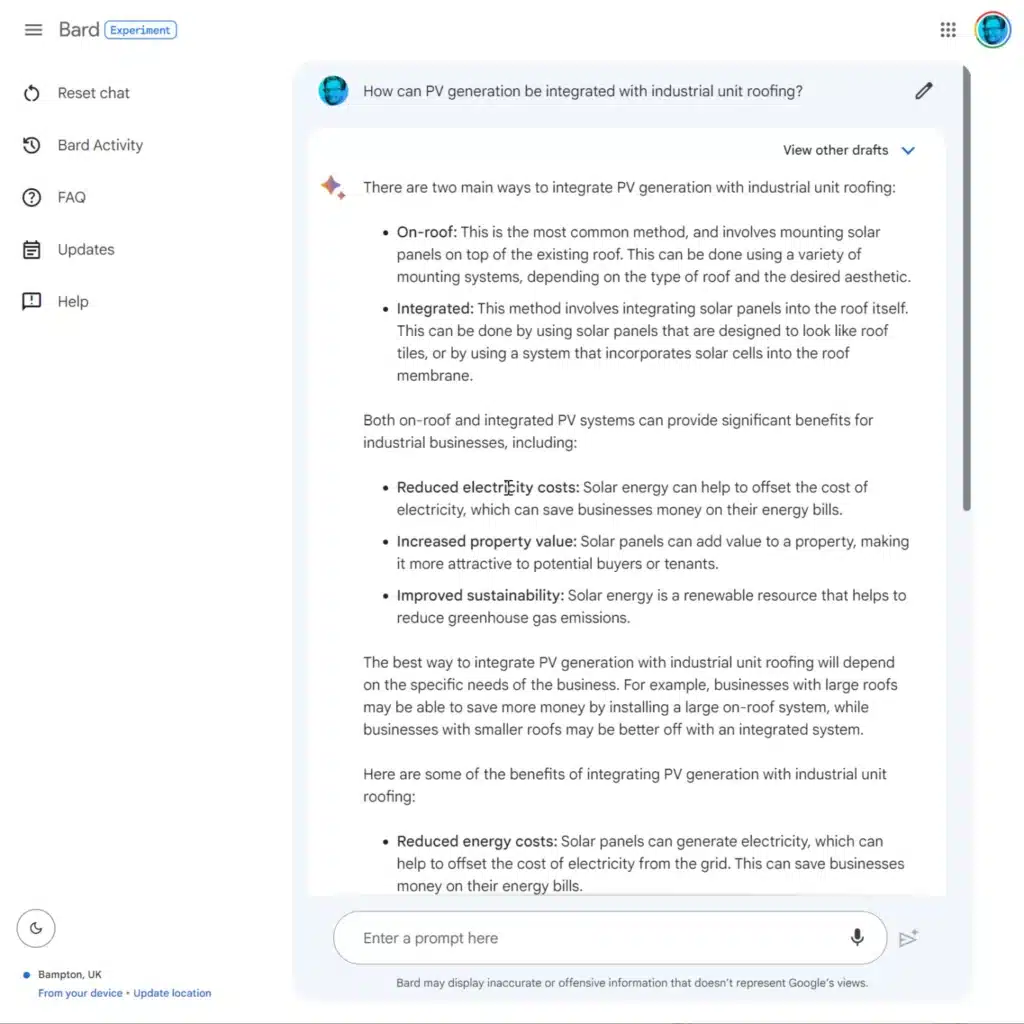

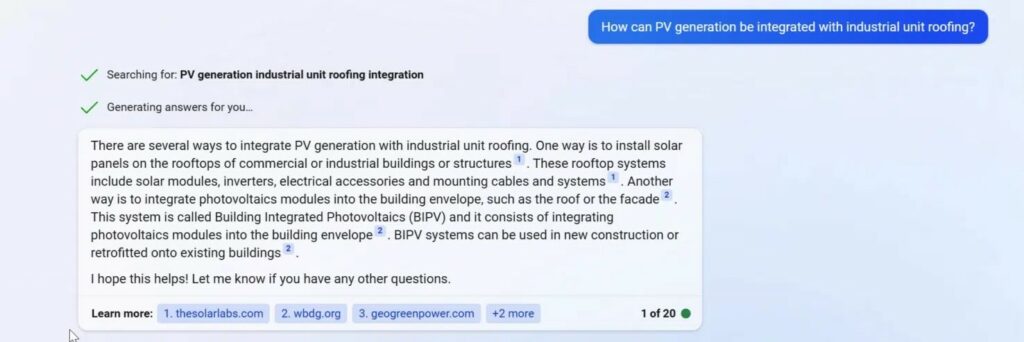

Bard also had a habit of offering information that didn’t directly answer my question. For example, when I asked “How can PV generation be integrated with industrial unit roofing?” Bard gave a good basic answer, but then rambled off to talk about the benefits of solar power. My question implies that I already know quite a bit about solar power and that I’m looking for something specific, so this extra off-topic content doesn’t add value. Bing Chat was typically much more focussed. Here are the Bard and Bing results side by side for comparison:

Again, Bard’s answers weren’t very focussed and didn’t respect the specific context implicit in my question. For example, when I asked Bard “What’s the most comprehensive source of construction industry leads in the UK?” it gave me a slightly useful answer, but then suggested I might want to try going to conferences or networking to get leads!

Bard really lost the plot on several queries here. For example, when I asked “Is the Curious Lounge a high quality coworking space?” it hallucinated several plausible-looking but completely imaginary reviews.

It’s an uneven contest at present.

Bard rambles, forgets or ignores important context, and – worst of all – Just Makes Sh*t Up when it doesn’t know the answer. Bing Chat, in comparison, stayed focussed and was honest about its limitations.

I really can’t recommend Bard in its current form as a B2B research tool, and I don’t think we’ll see much take-up of it in the B2B world unless or until Google improves it. For now: stick with Bing Chat for your B2B research.

But there is so much at stake here for the search engine giants that I’m sure we WILL see great improvements in Bard and other tools. We’ll keep testing and reporting on progress!

Given the significance of this technology change, you can be sure we’ll be following developments in generative AI-powered search closely. So sign up for our email newsletter and keep an eye on our blog to stay in the loop!

If you have questions about how Google’s new AI chatbot might affect your business, or simply want to continue the conversation, please get in touch!



© Sharp Ahead | VAT: 184 8058 77 | Sharp Ahead is a company registered in England and Wales with company number 08971343
